Introduction

OCR on documents remains a challenging task. While printed text can often be recognized with 95% or higher accuracy, real-world documents (with handwriting, non-standard layouts, and other irregularities) are still much harder to read accurately. High-quality systems exist for the individual sub-tasks of document reading: OCR, layout analysis, structure recognition, and classification.

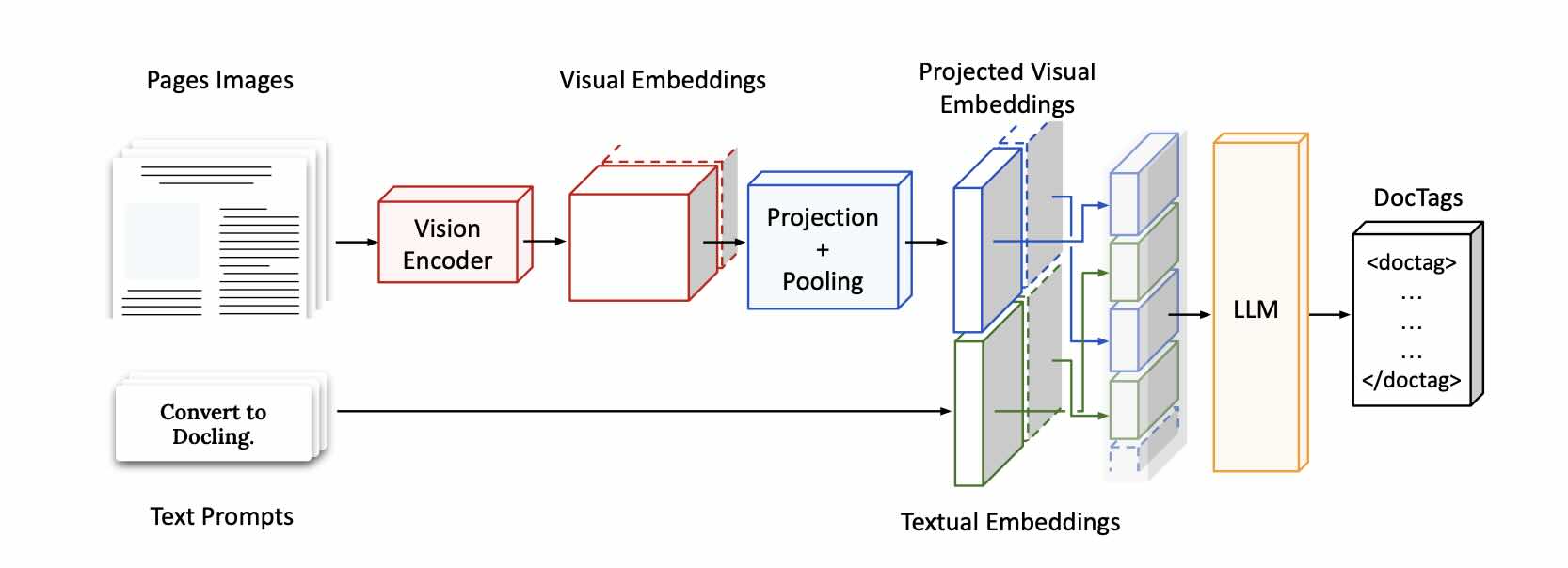

A recent trend is to use large vision-language models to solve the whole conversion task in one shot, while letting the user define additional, specific tasks in the prompt.

This post is about SmolDocling, a very small and compute-efficient vision-language model that handles both the conversion task and the instructions written in a prompt. The post is based on the SmolDocling research paper along with some personal experience from working with it.

Architecture

SmolDocling comes from Hugging Face’s SmolVLM family. It was trained on datasets that allow it to recognize captions, charts, forms, code, equations, tables, footnotes, lists, page footers and headers, section headings, and text. SmolDocling runs OCR on these elements and recognizes the type and location of each one. This is the main task of SmolDocling: document conversion and document understanding.

The red cube labeled Vision Encoder in the picture above is the image encoder used in SmolVLM models, SigLIP-base patch-16/512 (93M). It comes from Google’s family of CLIP-style image encoders, and it replaces CLIP’s contrastive softmax loss with a sigmoid cross-entropy loss applied to each image-text pair independently (a change reported to make training more stable and image-text matching more accurate). Its strengths are low memory use and fast inference, and both are put to work here for multimodal reasoning tasks.
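To make the difference between the two losses concrete, here is a minimal sketch in plain Python. It is not the SigLIP training code: the function names are hypothetical, the input is assumed to be a square matrix of image-text logits that already includes any temperature and bias, and dividing by the batch size is an assumed normalization.

```python
import math


def softmax_contrastive_loss(logits):
    """CLIP-style loss: softmax cross-entropy over rows and columns, averaged.

    logits[i][j] is the similarity of image i and text j; matching pairs
    sit on the diagonal. Every pair competes with the whole batch.
    """
    n = len(logits)

    def row_cross_entropy(matrix):
        total = 0.0
        for i in range(n):
            log_denominator = math.log(sum(math.exp(v) for v in matrix[i]))
            total += log_denominator - matrix[i][i]
        return total / n

    transposed = [list(column) for column in zip(*logits)]
    return 0.5 * (row_cross_entropy(logits) + row_cross_entropy(transposed))


def sigmoid_pairwise_loss(logits):
    """SigLIP-style loss: every (image, text) pair is its own binary problem.

    Label is +1 on the diagonal and -1 elsewhere; no normalization across
    the batch is needed.
    """
    n = len(logits)
    total = 0.0
    for i in range(n):
        for j in range(n):
            label = 1.0 if i == j else -1.0
            # -log(sigmoid(label * logit)) == log(1 + exp(-label * logit))
            total += math.log1p(math.exp(-label * logits[i][j]))
    return total / n  # assumed normalization: per image in the batch


aligned = [[5.0, -5.0], [-5.0, 5.0]]   # matching pairs score high
shuffled = [[-5.0, 5.0], [5.0, -5.0]]  # matching pairs score low
print(sigmoid_pairwise_loss(aligned) < sigmoid_pairwise_loss(shuffled))
print(softmax_contrastive_loss(aligned) < softmax_contrastive_loss(shuffled))
```

The point of the sketch is structural: the softmax version needs the full row and column to normalize each term, while the sigmoid version scores each pair on its own.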

Usage

We could pick a bigger SmolDocling model, or a large vision-language model, to quickly get higher accuracy, but at the cost of ‘heavier’ inference and much higher compute usage. SmolDocling finds its niche in deployments on edge devices or in any resource-constrained setting. Another use is quick prototyping and experimentation: it’s usually better to start with a small, fast model and avoid the complexity that comes with size.

[TODO: measure the memory usage during inference. Is it below or above 1 GB?]
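Until that is measured, a rough lower bound can be estimated from the weights alone. The sketch below assumes a parameter count of about 256M (an assumption, not a figure from this post) and counts only the stored weights. Activations, the KV cache, and framework overhead come on top, so real inference memory will be higher.

```python
def weight_memory_gib(num_params: int, bytes_per_param: int) -> float:
    """Memory needed just to hold the weights, in GiB."""
    return num_params * bytes_per_param / 2**30


# Assumed parameter count; replace it with the real number for your checkpoint.
PARAMS = 256_000_000

for dtype_name, size_in_bytes in {"float32": 4, "bfloat16": 2, "int8": 1}.items():
    print(f"{dtype_name:>8}: {weight_memory_gib(PARAMS, size_in_bytes):.2f} GiB")
```

Under these assumptions the weights come to roughly 0.48 GiB in bfloat16 and 0.95 GiB in float32, so whether the whole run stays under 1 GB depends on the precision and on the runtime overhead.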

DocTags

Another interesting thing is a standard proposed together with the SmolDocling model: DocTags. It was created to make inference efficient and to train VLMs in a standardized way. HTML and Markdown are ambiguous and do not keep the document layout context. DocTags separates text content from the layout of the document, which brings clarity. DocTags also has a clear and concise format, which saves tokens and thus reduces the cost of inference and training for VLMs. See a basic, simplified example:

HTML:

<h1>Invoice</h1><p>Customer Name: John Doe</p>

~20–25 tokens.

DocTags:

<heading>Invoice</heading><para>Customer Name: John Doe</para>

~12–15 tokens.
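Where does the saving come from? One way to see it is with a toy tokenizer. The sketch below is not a real model tokenizer: `toy_tokenize` is a hypothetical helper that splits text into words and single punctuation marks, and optionally keeps a set of special tokens atomic. It assumes the DocTags tags are registered as single vocabulary entries, while generic HTML tags are broken into pieces. The absolute counts will differ from a real tokenizer; only the relative effect matters.

```python
import re


def toy_tokenize(text, special_tokens=()):
    """Split text into words and punctuation, keeping special tokens whole."""
    # Longest specials first, so "</para>" is tried before shorter patterns.
    specials = sorted(special_tokens, key=len, reverse=True)
    alternatives = [re.escape(token) for token in specials]
    alternatives += [r"\w+", r"[^\w\s]"]
    return re.findall("|".join(alternatives), text)


html = "<h1>Invoice</h1><p>Customer Name: John Doe</p>"
doctags = "<heading>Invoice</heading><para>Customer Name: John Doe</para>"

# Hypothetical special-token list, taken from the simplified example above.
doctag_vocab = {"<heading>", "</heading>", "<para>", "</para>"}

print(len(toy_tokenize(html)))                   # tags split into pieces
print(len(toy_tokenize(doctags)))                # same markup cost as HTML
print(len(toy_tokenize(doctags, doctag_vocab)))  # each tag is one token
```

In this toy setting the two markups cost the same when tags are split into pieces, and the DocTags count only drops once each tag is a single vocabulary entry.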

DocTags builds on the OTSL standard and its full vocabulary. OTSL stands for Optimized Table Structure Language, a specialized markup language designed to capture table structure. This choice also brings clarity and saves tokens.
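To give a feel for how compact such a table encoding can be, here is a small sketch. It assumes the five base OTSL symbols as I understand them from the OTSL paper: `C` for a new cell, `L` for a cell merged with its left neighbor, `U` for a cell merged with the one above, `X` for a cell merged in both directions, and `NL` for the end of a row. The `to_otsl` helper and the grid-of-ids input format are hypothetical, and DocTags itself writes these symbols as tags and adds more of them.

```python
def to_otsl(grid):
    """Encode a table grid as a flat list of OTSL-style structure tokens.

    grid is a list of rows; cells that belong to the same merged cell
    share the same id.
    """
    tokens = []
    for r, row in enumerate(grid):
        for c, cell_id in enumerate(row):
            same_left = c > 0 and row[c - 1] == cell_id
            same_up = r > 0 and grid[r - 1][c] == cell_id
            if same_left and same_up:
                tokens.append("X")  # continues a span both ways
            elif same_left:
                tokens.append("L")  # continues a horizontal span
            elif same_up:
                tokens.append("U")  # continues a vertical span
            else:
                tokens.append("C")  # top-left corner of a cell
        tokens.append("NL")
    return tokens


# A header spanning two columns, with two ordinary cells below it.
table = [
    ["header", "header"],
    ["a", "b"],
]
print(" ".join(to_otsl(table)))
```

Each grid position costs exactly one token, and row and column spans need no extra attributes, which is where the savings over HTML table markup come from.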

Pre-training datasets

Seeing the lack of good multimodal document data, the SmolDocling team created a new public dataset, DocLayNet-PT. It contains 1.4M pages from the DocFM dataset (PDF documents from CommonCrawl, Wikipedia, and business domains). The original SmolVLM had DocVQA (Document Visual Question Answering) capabilities. To keep this feature, SmolDocling was also trained on the Docmatix dataset with added DocTags format information.